OpenStack Ocata Deployment with OpenStack-Ansible using Ceph

In this tutorial, we deploy a new OpenStack cloud from scratch using OpenStack-Ansible. The purpose is to document every step we took and the issues we faced. We assume that Ceph is already installed and configured; to deploy Ceph, you can use https://github.com/ceph/ceph-ansible. This tutorial is based on https://docs.openstack.org/project-deploy-guide/openstack-ansible/ocata/

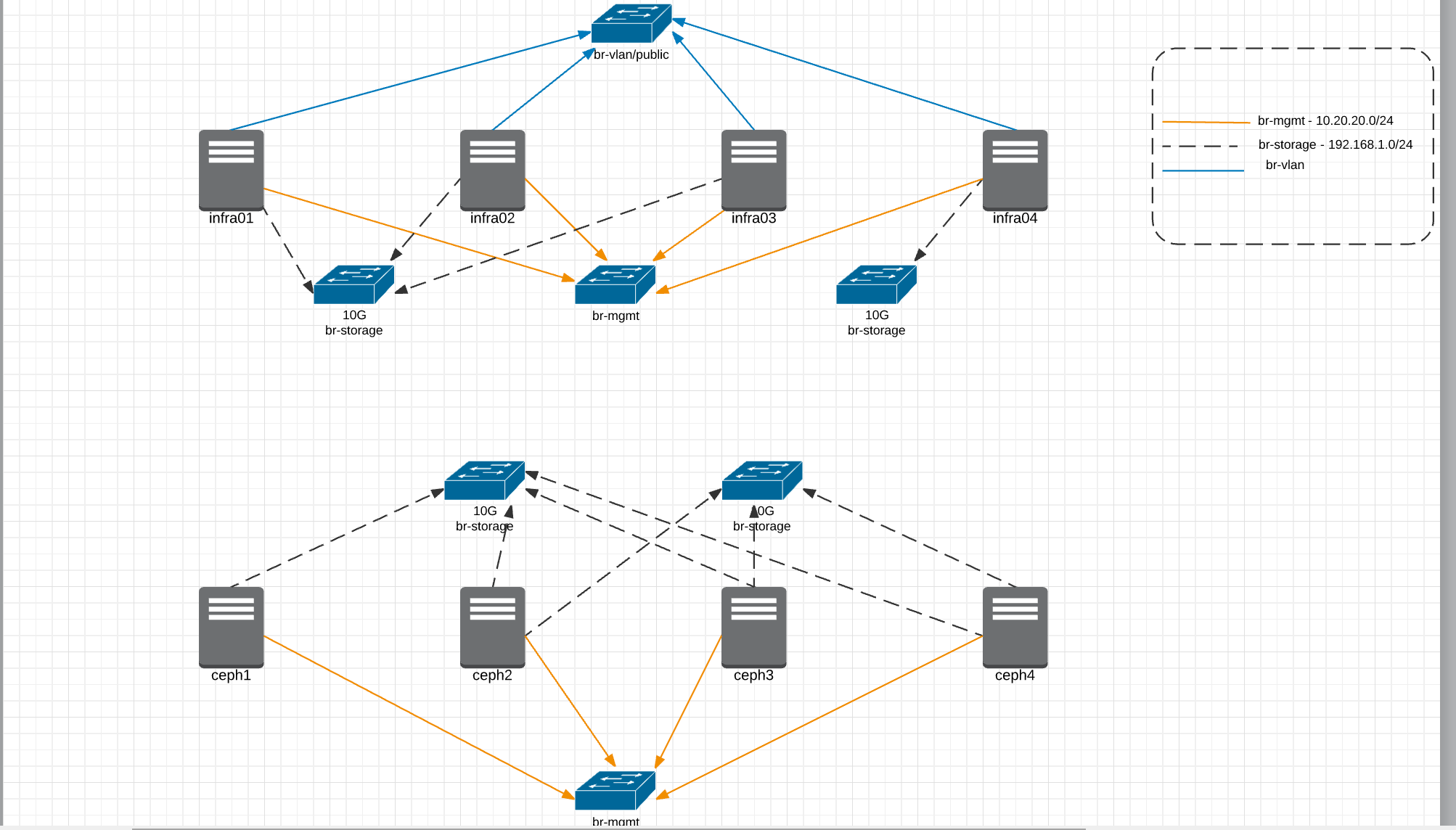

Our stack

- 4 compute/infra nodes

- 4 ceph nodes

- OS: Ubuntu 16.04

- Kernel: 4.4.0-83-generic

- Deployment Host: compute1

1. Prepare the deployment host (in our case, compute1)

apt-get update && apt-get dist-upgrade -y

apt-get install -y aptitude build-essential git ntp ntpdate openssh-server python-dev sudo

Configure NTP and reboot the server
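Before rebooting, it helps to confirm that ntpd has actually selected a time source. In `ntpq -pn` output, the peer ntpd is synchronized to is marked with a `*` in the first column. The helper below is a minimal POSIX shell sketch; the function name `ntp_system_peer` is our own, and it assumes the classic `ntpq -pn` layout of two header lines followed by one line per peer.

```shell
# ntp_system_peer: read `ntpq -pn` output on stdin, print the address of the
# peer marked with '*' (the selected system peer), and fail if there is none.
ntp_system_peer() {
  awk '
    # Skip the two header lines; the tally code is the first character.
    NR > 2 && substr($0, 1, 1) == "*" {
      print substr($1, 2)   # strip the leading "*" from the address
      found = 1
      exit
    }
    END { exit !found }'
}
```

Usage: `ntpq -pn | ntp_system_peer || echo "NTP is not synchronized yet"`. A freshly restarted ntpd can take a few minutes to select a peer, so an empty result right after a restart is not necessarily an error.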

2. Configure the network

For more information, see https://docs.openstack.org/project-deploy-guide/openstack-ansible/ocata/overview-network-arch.html

Below is the /etc/network/interfaces file from compute1, as an example:

auto lo
iface lo inet loopback
    dns-nameservers 172.31.7.243
    dns-search maas

auto eno1
iface eno1 inet manual
    mtu 1500

auto eno2
iface eno2 inet manual
    mtu 1500

auto ens15
iface ens15 inet manual
    bond-master bond0
    mtu 9000

auto ens15d1
iface ens15d1 inet manual
    bond-master bond0
    mtu 9000

auto bond0
iface bond0 inet manual
    bond-lacp-rate 1
    mtu 9000
    bond-mode 802.3ad
    bond-miimon 100
    bond-slaves none
    bond-xmit_hash_policy layer3+4

auto bond0.100
iface bond0.100 inet manual
    vlan-raw-device bond0

auto br-mgmt
iface br-mgmt inet static
    address 10.20.20.10/24
    gateway 10.20.20.2
    bridge_fd 15
    bridge_ports eno2

auto br-storage
iface br-storage inet static
    address 192.168.1.10
    netmask 255.255.255.0
    bridge_fd 15
    mtu 9000
    bridge_ports bond0

auto br-vlan
iface br-vlan inet manual
    bridge_fd 15
    mtu 1500
    bridge_ports eno1

auto br-vxlan
iface br-vxlan inet static
    address 10.30.30.10
    netmask 255.255.255.0
    bridge_fd 15
    mtu 9000
    bridge_ports bond0.100

source /etc/network/interfaces.d/*.cfg
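After bringing the interfaces up, it is worth checking that the MTUs the kernel reports match the file, because a single 1500-byte link inside a jumbo-frame path is easy to miss. The sketch below reads `/sys/class/net/<iface>/mtu`; the helper names and the `SYSFS_NET` override are hypothetical, and the expected values are only the ones set explicitly with `mtu` in the file above.

```shell
# Base of the kernel's per-interface sysfs tree; override SYSFS_NET for testing.
SYSFS_NET="${SYSFS_NET:-/sys/class/net}"

# check_mtu IFACE EXPECTED: print OK/BAD/MISSING and fail unless the MTU matches.
check_mtu() {
  local iface="$1" want="$2" got
  if [ ! -r "$SYSFS_NET/$iface/mtu" ]; then
    echo "MISSING $iface"
    return 1
  fi
  got=$(cat "$SYSFS_NET/$iface/mtu")
  if [ "$got" = "$want" ]; then
    echo "OK $iface $got"
  else
    echo "BAD $iface mtu=$got want=$want"
    return 1
  fi
}

# check_all_mtus: check every interface whose MTU is set in /etc/network/interfaces.
check_all_mtus() {
  local rc=0 pair
  for pair in eno1:1500 eno2:1500 ens15:9000 ens15d1:9000 \
              bond0:9000 br-storage:9000 br-vlan:1500 br-vxlan:9000; do
    # Split "name:mtu" into its two halves.
    check_mtu "${pair%%:*}" "${pair##*:}" || rc=1
  done
  return $rc
}
```

To test the jumbo-frame path end to end, `ping -M do -s 8972 -c 3 192.168.1.100` sends unfragmentable packets that exactly fill a 9000-byte MTU (9000 minus the 20-byte IPv4 header and the 8-byte ICMP header).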

3. Install source and dependencies

git clone -b 15.1.6 https://git.openstack.org/openstack/openstack-ansible /opt/openstack-ansible

/opt/openstack-ansible/scripts/bootstrap-ansible.sh

4. Configure SSH keys

Generate an SSH key on compute1 and copy its public key to all target hosts.
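One way to do this is with `ssh-keygen` and `ssh-copy-id`. The sketch below is our own helper, not part of OpenStack-Ansible: `distribute_ssh_key`, `run`, `DRY_RUN` and `SSH_KEY` are hypothetical names, and it assumes password logins as root are still possible so that `ssh-copy-id` can install the key. Set `DRY_RUN=1` to print the commands instead of running them.

```shell
# run: execute a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# distribute_ssh_key HOST...: create a key if needed, then copy it to each host.
distribute_ssh_key() {
  local key="${SSH_KEY:-$HOME/.ssh/id_rsa}" host
  # Only generate a new (passphrase-less) key if none exists yet.
  if [ ! -f "$key" ]; then
    run ssh-keygen -t rsa -b 4096 -N "" -f "$key"
  fi
  for host in "$@"; do
    run ssh-copy-id -i "$key.pub" "root@$host"
  done
}
```

With the addresses from our openstack_user_config.yml this would be `distribute_ssh_key 10.20.20.10 10.20.20.11 10.20.20.12 10.20.20.13 192.168.1.100 192.168.1.101 192.168.1.102 192.168.1.103`. 10.20.20.10 is compute1's own br-mgmt address; it is included because the deployment host is also a target host.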

5. Prepare the target hosts

Run the following on every target host:

apt-get update

apt-get dist-upgrade -y

apt-get install -y bridge-utils debootstrap ifenslave ifenslave-2.6 lsof lvm2 ntp ntpdate openssh-server sudo tcpdump vlan

echo 'bonding' >> /etc/modules

echo '8021q' >> /etc/modules

service ntp restart
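The `echo ... >> /etc/modules` commands append a new line every time they run, so preparing a host twice leaves duplicate entries. An idempotent variant is sketched below; `ensure_module` and the `MODULES_FILE` override are our own names.

```shell
# File listing kernel modules to load at boot; override MODULES_FILE for testing.
MODULES_FILE="${MODULES_FILE:-/etc/modules}"

# ensure_module NAME: append NAME to the modules file unless it is already listed.
ensure_module() {
  grep -qxF "$1" "$MODULES_FILE" 2>/dev/null || echo "$1" >> "$MODULES_FILE"
}
```

Usage: `ensure_module bonding; ensure_module 8021q`. /etc/modules only takes effect at boot; `modprobe bonding` and `modprobe 8021q` load the modules immediately.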

6. Configure Storage

In our case, Ceph was already installed with ceph-ansible. These are the pools we configured:

root@ceph1:~# rados lspools
cinder-volumes
.rgw.root
default.rgw.control
default.rgw.data.root
default.rgw.gc
default.rgw.log
cinder-backup
ephemeral-vms
glance-images
default.rgw.users.uid
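Before running the playbooks, you can check that the pools referenced in the configuration files below actually exist: `glance-images` (glance_rbd_store_pool), `ephemeral-vms` (nova_libvirt_images_rbd_pool) and `cinder-volumes` (rbd_pool). A minimal sketch; `missing_pools` and `REQUIRED_POOLS` are hypothetical names.

```shell
# Pools referenced by user_variables.yml and openstack_user_config.yml.
REQUIRED_POOLS="glance-images ephemeral-vms cinder-volumes"

# missing_pools: read `rados lspools` output on stdin, print every required
# pool that is absent, and fail if any are missing.
missing_pools() {
  local pools pool rc=0
  pools=$(cat)
  for pool in $REQUIRED_POOLS; do
    if ! printf '%s\n' "$pools" | grep -qxF "$pool"; then
      echo "$pool"
      rc=1
    fi
  done
  return $rc
}
```

Usage, on a Ceph node: `rados lspools | missing_pools && echo "all pools present"`.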

7. Playbook time

Copy the contents of the /opt/openstack-ansible/etc/openstack_deploy directory to the /etc/openstack_deploy directory.

cp -rp /opt/openstack-ansible/etc/openstack_deploy /etc/openstack_deploy

cp /etc/openstack_deploy/openstack_user_config.yml.example /etc/openstack_deploy/openstack_user_config.yml

7.1 Service credentials

cd /opt/openstack-ansible/scripts

python pw-token-gen.py --file /etc/openstack_deploy/user_secrets.yml
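To confirm the script filled in every secret, you can list the top-level keys that still have an empty value. This is a sketch under the assumption that every top-level key in user_secrets.yml should receive a value; `empty_secrets` is our own name.

```shell
# empty_secrets FILE: print top-level YAML keys with an empty value and fail
# if any are found. Comments and the "---" document marker never match.
empty_secrets() {
  awk -F: '/^[A-Za-z0-9_]+:[ \t]*$/ { print $1; n++ }
           END { exit (n > 0) }' "$1"
}
```

Usage: `empty_secrets /etc/openstack_deploy/user_secrets.yml && echo "all secrets set"`.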

7.1.1 Configuration files

Below are the user_variables.yml and openstack_user_config.yml files we used for this Ceph-backed deployment.

user_variables.yml

---

# Copyright 2014, Rackspace US, Inc.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

###

### This file contains commonly used overrides for convenience. Please inspect

### the defaults for each role to find additional override options.

###

## Debug and Verbose options.

debug: true

## Common Glance Overrides

# Set glance_default_store to "swift" if using Cloud Files backend

# or "rbd" if using ceph backend; the latter will trigger ceph to get

# installed on glance. If using a file store, a shared file store is

# recommended. See the OpenStack-Ansible install guide and the OpenStack

# documentation for more details.

# Note that "swift" is automatically set as the default back-end if there

# are any swift hosts in the environment. Use this setting to override

# this automation if you wish for a different default back-end.

glance_default_store: rbd

## Ceph pool name for Glance to use

glance_rbd_store_pool: glance-images

glance_rbd_store_chunk_size: 8

## Common Nova Overrides

# When nova_libvirt_images_rbd_pool is defined, ceph will be installed on nova

# hosts.

nova_libvirt_images_rbd_pool: ephemeral-vms

nova_force_config_drive: False

nova_nova_conf_overrides:
  libvirt:
    live_migration_uri: qemu+ssh://nova@%s/system?keyfile=/var/lib/nova/.ssh/id_rsa&no_verify=1

# If you wish to change the dhcp_domain configured for both nova and neutron

dhcp_domain: openstack.net

## Common Glance Overrides when using a Swift back-end

# By default when 'glance_default_store' is set to 'swift' the playbooks will

# expect to use the Swift back-end that is configured in the same inventory.

# If the Swift back-end is not in the same inventory (ie it is already setup

# through some other means) then these settings should be used.

#

# NOTE: Ensure that the auth version matches your authentication endpoint.

#

# NOTE: If the password for glance_swift_store_key contains a dollar sign ($),

# it must be escaped with an additional dollar sign ($$), not a backslash. For

# example, a password of "super$ecure" would need to be entered as

# "super$$ecure" below. See Launchpad Bug #1259729 for more details.

#

# glance_swift_store_auth_version: 3

# glance_swift_store_auth_address: "https://some.auth.url.com"

# glance_swift_store_user: "OPENSTACK_TENANT_ID:OPENSTACK_USER_NAME"

# glance_swift_store_key: "OPENSTACK_USER_PASSWORD"

# glance_swift_store_container: "NAME_OF_SWIFT_CONTAINER"

# glance_swift_store_region: "NAME_OF_REGION"

cinder_ceph_client: cinder

cephx: true

## Common Ceph Overrides

ceph_mons:

- 192.168.1.100

- 192.168.1.101

- 192.168.1.102

## Custom Ceph Configuration File (ceph.conf)

# By default, your deployment host will connect to one of the mons defined above to

# obtain a copy of your cluster's ceph.conf. If you prefer, uncomment ceph_conf_file

# and customise to avoid ceph.conf being copied from a mon.

#ceph_conf_file: |

# [global]

# fsid = 00000000-1111-2222-3333-444444444444

# mon_initial_members = mon1.example.local,mon2.example.local,mon3.example.local

# mon_host = 10.16.5.40,10.16.5.41,10.16.5.42

# # optionally, you can use this construct to avoid defining this list twice:

# # mon_host = {{ ceph_mons|join(',') }}

# auth_cluster_required = cephx

# auth_service_required = cephx

# By default, openstack-ansible configures all OpenStack services to talk to

# RabbitMQ over encrypted connections on port 5671. To opt-out of this default,

# set the rabbitmq_use_ssl variable to 'false'. The default setting of 'true'

# is highly recommended for securing the contents of RabbitMQ messages.

# rabbitmq_use_ssl: false

# RabbitMQ management plugin is enabled by default, the guest user has been

# removed for security reasons and a new userid 'monitoring' has been created

# with the 'monitoring' user tag. In order to modify the userid, uncomment the

# following and change 'monitoring' to your userid of choice.

# rabbitmq_monitoring_userid: monitoring

## Additional pinning generator that will allow for more packages to be pinned as you see fit.

## All pins allow for package and versions to be defined. Be careful using this as versions

## are always subject to change and updates regarding security will become your problem from this

## point on. Pinning can be done based on a package version, release, or origin. Use "*" in the

## package name to indicate that you want to pin all package to a particular constraint.

# apt_pinned_packages:

# - { package: "lxc", version: "1.0.7-0ubuntu0.1" }

# - { package: "libvirt-bin", version: "1.2.2-0ubuntu13.1.9" }

# - { package: "rabbitmq-server", origin: "www.rabbitmq.com" }

# - { package: "*", release: "MariaDB" }

## Environment variable settings

# This allows users to specify the additional environment variables to be set,

# which is useful when working behind a proxy. If working behind

# a proxy, it's important to always specify the scheme as "http://". This is what

# the underlying python libraries will handle best. This proxy information will be

# placed both on the hosts and inside the containers.

## Example environment variable setup:

## (1) This sets up a permanent environment, used during and after deployment:

# proxy_env_url: http://username:pa$$w0rd@10.10.10.9:9000/

# no_proxy_env: "localhost,127.0.0.1,{{ internal_lb_vip_address }},{{ external_lb_vip_address }},{% for host in groups['all_containers'] %}{{ hostvars[host]['container_address'] }}{% if not loop.last %},{% endif %}{% endfor %}"

# global_environment_variables:

# HTTP_PROXY: "{{ proxy_env_url }}"

# HTTPS_PROXY: "{{ proxy_env_url }}"

# NO_PROXY: "{{ no_proxy_env }}"

# http_proxy: "{{ proxy_env_url }}"

# https_proxy: "{{ proxy_env_url }}"

# no_proxy: "{{ no_proxy_env }}"

#

## (2) This is applied only during deployment, nothing is left after deployment is complete:

# deployment_environment_variables:

# http_proxy: http://username:pa$$w0rd@10.10.10.9:9000/

# https_proxy: http://username:pa$$w0rd@10.10.10.9:9000/

# no_proxy: "localhost,127.0.0.1,{{ internal_lb_vip_address }},{{ external_lb_vip_address }}"

## SSH connection wait time

# If an increased delay for the ssh connection check is desired,

# uncomment this variable and set it appropriately.

ssh_delay: 10

## HAProxy

# Set this to False to disable keepalived installation (cf. documentation)

haproxy_use_keepalived: True

#

# HAProxy Keepalived configuration (cf. documentation)

# Make sure that this is set correctly according to the CIDR used for your

# internal and external addresses.

#haproxy_keepalived_external_vip_cidr: "{{external_lb_vip_address}}/32"

haproxy_keepalived_internal_vip_cidr: "10.20.20.50/24"

#haproxy_keepalived_external_interface:

haproxy_keepalived_internal_interface: br-mgmt

keepalived_use_latest_stable: True

# Defines the default VRRP id used for keepalived with haproxy.

# Overwrite it to your value to make sure you don't overlap

# with existing VRRPs id on your network. Default is 10 for the external and 11 for the

# internal VRRPs

# haproxy_keepalived_external_virtual_router_id:

# haproxy_keepalived_internal_virtual_router_id:

# Defines the VRRP master/backup priority. Defaults respectively to 100 and 20

# haproxy_keepalived_priority_master:

# haproxy_keepalived_priority_backup:

# Keepalived default IP address used to check its alive status (IPv4 only)

# keepalived_ping_address: "193.0.14.129"

# All the previous variables are used in a var file, fed to the keepalived role.

# To use another file to feed the role, override the following var:

# haproxy_keepalived_vars_file: 'vars/configs/keepalived_haproxy.yml'

openstack_service_publicuri_proto: http

openstack_external_ssl: false

haproxy_ssl: false

openstack_user_config.yml

---
cidr_networks:
  container: 10.20.20.0/24
  tunnel: 10.30.30.0/24
  storage: 192.168.1.0/24

used_ips:
  - "10.20.20.1,10.20.20.50"
  - "10.20.20.100,10.20.20.150"
  - "10.30.30.1,10.30.30.50"
  - "10.30.30.100,10.30.30.150"
  - "192.168.1.1,192.168.1.50"
  - "192.168.1.100,192.168.1.150"

global_overrides:
  internal_lb_vip_address: 10.20.20.50
  #
  # The below domain name must resolve to an IP address
  # in the CIDR specified in haproxy_keepalived_external_vip_cidr.
  # If using different protocols (https/http) for the public/internal
  # endpoints the two addresses must be different.
  #
  external_lb_vip_address: vip.openstack.net
  tunnel_bridge: "br-vxlan"
  management_bridge: "br-mgmt"
  provider_networks:
    - network:
        container_bridge: "br-mgmt"
        container_type: "veth"
        container_interface: "eth1"
        ip_from_q: "container"
        type: "raw"
        group_binds:
          - all_containers
          - hosts
        is_container_address: true
        is_ssh_address: true
    - network:
        container_bridge: "br-vxlan"
        container_type: "veth"
        container_interface: "eth10"
        ip_from_q: "tunnel"
        type: "vxlan"
        range: "1:1000"
        net_name: "vxlan"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-vlan"
        container_type: "veth"
        container_interface: "eth12"
        host_bind_override: "eno1"
        type: "flat"
        net_name: "flat"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-vlan"
        container_type: "veth"
        container_interface: "eth11"
        type: "vlan"
        range: "1:1"
        net_name: "vlan"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-storage"
        container_type: "veth"
        container_interface: "eth2"
        ip_from_q: "storage"
        type: "raw"
        group_binds:
          - glance_api
          - cinder_api
          - cinder_volume
          - nova_compute
          - mons

###
### Infrastructure
###

# galera, memcache, rabbitmq, utility
shared-infra_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# repository (apt cache, python packages, etc)
repo-infra_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# load balancer
# Ideally the load balancer should not use the Infrastructure hosts.
# Dedicated hardware is best for improved performance and security.
haproxy_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# rsyslog server
log_hosts:
  infra4:
    ip: 10.20.20.13

###
### OpenStack
###

# keystone
identity_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# cinder api services
storage-infra_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# glance
# The settings here are repeated for each infra host.
# They could instead be applied as global settings in
# user_variables, but are left here to illustrate that
# each container could have different storage targets.
image_hosts:
  infra1:
    ip: 10.20.20.10
    # container_vars:
    #   limit_container_types: glance
    #   glance_nfs_client:
    #     - server: "172.29.244.15"
    #       remote_path: "/images"
    #       local_path: "/var/lib/glance/images"
    #       type: "nfs"
    #       options: "_netdev,auto"
  infra2:
    ip: 10.20.20.11
    # container_vars:
    #   limit_container_types: glance
    #   glance_nfs_client:
    #     - server: "172.29.244.15"
    #       remote_path: "/images"
    #       local_path: "/var/lib/glance/images"
    #       type: "nfs"
    #       options: "_netdev,auto"
  infra3:
    ip: 10.20.20.12
    # container_vars:
    #   limit_container_types: glance
    #   glance_nfs_client:
    #     - server: "172.29.244.15"
    #       remote_path: "/images"
    #       local_path: "/var/lib/glance/images"
    #       type: "nfs"
    #       options: "_netdev,auto"

# nova api, conductor, etc services
compute-infra_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# heat
orchestration_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# horizon
dashboard_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# neutron server, agents (L3, etc)
network_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# ceilometer (telemetry data collection)
metering-infra_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# aodh (telemetry alarm service)
metering-alarm_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# gnocchi (telemetry metrics storage)
metrics_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12

# nova hypervisors
compute_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12
  infra4:
    ip: 10.20.20.13

# ceilometer compute agent (telemetry data collection)
metering-compute_hosts:
  infra1:
    ip: 10.20.20.10
  infra2:
    ip: 10.20.20.11
  infra3:
    ip: 10.20.20.12
  infra4:
    ip: 10.20.20.13

# cinder volume hosts (Ceph RBD-backed)
# The settings here are repeated for each storage host.
# They could instead be applied as global settings in
# user_variables, but are left here to illustrate that
# each container could have different storage targets.
storage_hosts:
  stor1:
    ip: 192.168.1.100
    container_vars:
      cinder_backends:
        limit_container_types: cinder_volume
        rbd:
          volume_group: cinder-volumes
          volume_driver: cinder.volume.drivers.rbd.RBDDriver
          volume_backend_name: rbd
          rbd_pool: cinder-volumes
          rbd_ceph_conf: /etc/ceph/ceph.conf
          rbd_user: "{{ cinder_ceph_client }}"
          rbd_secret_uuid: "{{ cinder_ceph_client_uuid }}"
  stor2:
    ip: 192.168.1.101
    container_vars:
      cinder_backends:
        limit_container_types: cinder_volume
        rbd:
          volume_group: cinder-volumes
          volume_driver: cinder.volume.drivers.rbd.RBDDriver
          volume_backend_name: rbd
          rbd_pool: cinder-volumes
          rbd_ceph_conf: /etc/ceph/ceph.conf
          rbd_user: "{{ cinder_ceph_client }}"
          rbd_secret_uuid: "{{ cinder_ceph_client_uuid }}"
  stor3:
    ip: 192.168.1.102
    container_vars:
      cinder_backends:
        limit_container_types: cinder_volume
        rbd:
          volume_group: cinder-volumes
          volume_driver: cinder.volume.drivers.rbd.RBDDriver
          volume_backend_name: rbd
          rbd_pool: cinder-volumes
          rbd_ceph_conf: /etc/ceph/ceph.conf
          rbd_user: "{{ cinder_ceph_client }}"
          rbd_secret_uuid: "{{ cinder_ceph_client_uuid }}"
  stor4:
    ip: 192.168.1.103
    container_vars:
      cinder_backends:
        limit_container_types: cinder_volume
        rbd:
          volume_group: cinder-volumes
          volume_driver: cinder.volume.drivers.rbd.RBDDriver
          volume_backend_name: rbd
          rbd_pool: cinder-volumes
          rbd_ceph_conf: /etc/ceph/ceph.conf
          rbd_user: "{{ cinder_ceph_client }}"
          rbd_secret_uuid: "{{ cinder_ceph_client_uuid }}"
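A typo in `used_ips` (for example an end address that falls outside its `cidr_networks` range) silently reserves the wrong addresses, so it is worth checking each range before running the playbooks. Below is a minimal IPv4-only POSIX shell sketch; `ip2int` and `range_in_cidr` are our own helper names.

```shell
# ip2int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip2int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1          # split the dotted quad into $1..$4
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# range_in_cidr "START,END" NET/BITS: succeed if START <= END and both
# addresses lie inside the given network.
range_in_cidr() {
  local range="$1" cidr="$2" start end net bits mask base
  start=$(ip2int "${range%,*}")
  end=$(ip2int "${range#*,}")
  net=$(ip2int "${cidr%/*}")
  bits="${cidr#*/}"
  # Netmask as an integer, e.g. /24 -> 0xFFFFFF00.
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  base=$(( net & mask ))
  [ "$start" -le "$end" ] &&
    [ $(( start & mask )) -eq "$base" ] &&
    [ $(( end & mask )) -eq "$base" ]
}
```

Usage: `range_in_cidr "192.168.1.100,192.168.1.150" 192.168.1.0/24 && echo ok`. A range such as `192.168.1.100,192.168.11.50` fails because its end address is outside 192.168.1.0/24.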

7.2 Check syntax

cd /opt/openstack-ansible/playbooks

openstack-ansible setup-infrastructure.yml --syntax-check

7.3 Setup hosts

openstack-ansible setup-hosts.yml

7.4 Deploy HAProxy

openstack-ansible haproxy-install.yml

7.5 Run setup-infrastructure

openstack-ansible setup-infrastructure.yml
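Steps 7.3 to 7.5 must run in this order, and there is little point in starting one if the previous playbook failed. The wrapper below is a small POSIX shell sketch of that rule; `run_playbooks` and the `OSA_CMD` override are our own names, and it relies on `openstack-ansible` returning a non-zero exit status on failure, as the underlying `ansible-playbook` does.

```shell
# run_playbooks PLAYBOOK...: run each playbook in order, stopping at the first failure.
run_playbooks() {
  local pb
  for pb in "$@"; do
    echo "==> $pb"
    # OSA_CMD defaults to the real wrapper; override it for a dry run.
    ${OSA_CMD:-openstack-ansible} "$pb" || { echo "FAILED: $pb" >&2; return 1; }
  done
}
```

Usage: `cd /opt/openstack-ansible/playbooks && run_playbooks setup-hosts.yml haproxy-install.yml setup-infrastructure.yml`.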

7.6 Check Galera cluster status

ansible galera_container -m shell \
  -a "mysql -h localhost -e 'show status like \"%wsrep_cluster_%\";'"

Example of healthy output:

node3_galera_container-3ea2cbd3 | success | rc=0 >>
Variable_name Value
wsrep_cluster_conf_id 17
wsrep_cluster_size 3
wsrep_cluster_state_uuid 338b06b0-2948-11e4-9d06-bef42f6c52f1
wsrep_cluster_status Primary

node2_galera_container-49a47d25 | success | rc=0 >>
Variable_name Value
wsrep_cluster_conf_id 17
wsrep_cluster_size 3
wsrep_cluster_state_uuid 338b06b0-2948-11e4-9d06-bef42f6c52f1
wsrep_cluster_status Primary

node4_galera_container-76275635 | success | rc=0 >>
Variable_name Value
wsrep_cluster_conf_id 17
wsrep_cluster_size 3
wsrep_cluster_state_uuid 338b06b0-2948-11e4-9d06-bef42f6c52f1
wsrep_cluster_status Primary
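What makes the output healthy: every node reports the expected `wsrep_cluster_size`, the status `Primary`, and the same `wsrep_cluster_state_uuid`. The sketch below checks those three conditions on the output of the ansible command above; `galera_healthy` is our own name, and the expected size (3 in our deployment, one Galera container per infra host) is passed as an argument.

```shell
# galera_healthy SIZE: read the wsrep status output on stdin and succeed only
# if every node reports SIZE members, status Primary, and one shared state UUID.
galera_healthy() {
  awk -v want="$1" '
    $1 == "wsrep_cluster_size"   { n++; if ($2 != want) bad = 1 }
    $1 == "wsrep_cluster_status" { if ($2 != "Primary") bad = 1 }
    $1 == "wsrep_cluster_state_uuid" {
      if (uuid == "") uuid = $2
      else if ($2 != uuid) bad = 1
    }
    END { exit (bad || n == 0) }'
}
```

Usage: pipe the `ansible galera_container -m shell ...` command above into `galera_healthy 3`.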

7.7 Deploy OpenStack

openstack-ansible setup-openstack.yml

***Confirm success with zero items unreachable or failed:***

PLAY RECAP *********************************************************
deployment_host : ok=XX changed=0 unreachable=0 failed=0
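With many hosts, the recap is long and a single `failed=1` is easy to overlook. The sketch below prints every host whose `unreachable` or `failed` counter is non-zero; `recap_failures` is our own name, and it assumes uncolored output (setting `ANSIBLE_NOCOLOR=1` disables Ansible's colors).

```shell
# recap_failures: read playbook output on stdin, print hosts from the PLAY RECAP
# with unreachable or failed > 0, and fail if there are any.
recap_failures() {
  awk '/unreachable=/ && /failed=/ {
    bad = 0
    for (i = 2; i <= NF; i++) {
      split($i, kv, "=")          # e.g. "failed=1" -> kv[1]="failed", kv[2]="1"
      if ((kv[1] == "unreachable" || kv[1] == "failed") && kv[2] + 0 > 0) bad = 1
    }
    if (bad) { print $1; n++ }
  }
  END { exit (n > 0) }'
}
```

Usage: `openstack-ansible setup-openstack.yml | tee /root/setup-openstack.log`, then `recap_failures < /root/setup-openstack.log`.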

Always check whether any step failed before moving on.

8.0 Verify everything

8.1 Check the containers

root@openstack1:/opt/openstack-ansible/scripts# ./inventory-manage.py -l

+------------------------------------------------+----------+--------------------------+---------------+----------------+---------------+------------------------+
| container_name | is_metal | component | physical_host | tunnel_address | ansible_host | container_types |
+------------------------------------------------+----------+--------------------------+---------------+----------------+---------------+------------------------+
| infra1_aodh_container-3425c4c7 | None | aodh_api | infra1 | None | 10.20.20.51 | None |
| infra2_aodh_container-f5657115 | None | aodh_api | infra2 | None | 10.20.20.221 | None |
| infra3_aodh_container-2b91aaa6 | None | aodh_api | infra3 | None | 10.20.20.152 | None |
| infra1_ceilometer_api_container-0eac7d0d | None | ceilometer_agent_central | infra1 | None | 10.20.20.92 | None |
| infra2_ceilometer_api_container-e17187de | None | ceilometer_agent_central | infra2 | None | 10.20.20.179 | None |
| infra3_ceilometer_api_container-586b7e39 | None | ceilometer_agent_central | infra3 | None | 10.20.20.244 | None |
| infra1 | True | ceilometer_agent_compute | infra1 | None | 10.20.20.10 | infra1-host_containers |
| infra2 | True | ceilometer_agent_compute | infra2 | None | 10.20.20.11 | infra2-host_containers |
| infra3 | True | ceilometer_agent_compute | infra3 | None | 10.20.20.12 | infra3-host_containers |
| infra4 | True | ceilometer_agent_compute | infra4 | None | 10.20.20.13 | infra4-host_containers |
| infra1_ceilometer_collector_container-8778fcfb | None | ceilometer_collector | infra1 | None | 10.20.20.191 | None |
| infra2_ceilometer_collector_container-cbb55c69 | None | ceilometer_collector | infra2 | None | 10.20.20.173 | None |
| infra3_ceilometer_collector_container-2835084a | None | ceilometer_collector | infra3 | None | 10.20.20.241 | None |
| infra1_cinder_api_container-0936e945 | None | cinder_api | infra1 | None | 10.20.20.229 | None |
| infra2_cinder_api_container-b6fe5dba | None | cinder_api | infra2 | None | 10.20.20.211 | None |
| infra3_cinder_api_container-fe0b3819 | None | cinder_api | infra3 | None | 10.20.20.238 | None |
| stor1 | True | cinder_backup | stor1 | None | 192.168.1.100 | stor1-host_containers |
| stor2 | True | cinder_backup | stor2 | None | 192.168.1.101 | stor2-host_containers |
| stor3 | True | cinder_backup | stor3 | None | 192.168.1.102 | stor3-host_containers |
| stor4 | True | cinder_backup | stor4 | None | 192.168.1.103 | stor4-host_containers |
| infra1_cinder_scheduler_container-fc83ebc4 | None | cinder_scheduler | infra1 | None | 10.20.20.79 | None |
| infra2_cinder_scheduler_container-a757f7fd | None | cinder_scheduler | infra2 | None | 10.20.20.90 | None |
| infra3_cinder_scheduler_container-141627ce | None | cinder_scheduler | infra3 | None | 10.20.20.178 | None |
| infra1_galera_container-3553e5ad | None | galera | infra1 | None | 10.20.20.55 | None |
| infra2_galera_container-e1d4feff | None | galera | infra2 | None | 10.20.20.208 | None |
| infra3_galera_container-bc9c86db | None | galera | infra3 | None | 10.20.20.240 | None |
| infra1_glance_container-0525f6a0 | None | glance_api | infra1 | None | 10.20.20.204 | None |
| infra2_glance_container-987db1c7 | None | glance_api | infra2 | None | 10.20.20.248 | None |
| infra3_glance_container-ec5c3ea9 | None | glance_api | infra3 | None | 10.20.20.220 | None |
| infra1_gnocchi_container-8b274ec4 | None | gnocchi_api | infra1 | None | 10.20.20.71 | None |
| infra2_gnocchi_container-91f1915f | None | gnocchi_api | infra2 | None | 10.20.20.234 | None |
| infra3_gnocchi_container-148bed9d | None | gnocchi_api | infra3 | None | 10.20.20.58 | None |
| infra1_heat_apis_container-1f4677cd | None | heat_api_cloudwatch | infra1 | None | 10.20.20.167 | None |
| infra2_heat_apis_container-ace23717 | None | heat_api_cloudwatch | infra2 | None | 10.20.20.214 | None |
| infra3_heat_apis_container-65685847 | None | heat_api_cloudwatch | infra3 | None | 10.20.20.60 | None |
| infra1_heat_engine_container-490a2be3 | None | heat_engine | infra1 | None | 10.20.20.96 | None |
| infra2_heat_engine_container-3b00a93c | None | heat_engine | infra2 | None | 10.20.20.192 | None |
| infra3_heat_engine_container-9890e6ca | None | heat_engine | infra3 | None | 10.20.20.52 | None |
| infra1_horizon_container-3e18da0f | None | horizon | infra1 | None | 10.20.20.87 | None |
| infra2_horizon_container-c88d4a4f | None | horizon | infra2 | None | 10.20.20.160 | None |
| infra3_horizon_container-88c85174 | None | horizon | infra3 | None | 10.20.20.63 | None |
| infra1_keystone_container-33cfd6e8 | None | keystone | infra1 | None | 10.20.20.78 | None |
| infra2_keystone_container-b01d0dfa | None | keystone | infra2 | None | 10.20.20.217 | None |
| infra3_keystone_container-0c3a530e | None | keystone | infra3 | None | 10.20.20.153 | None |
| infra1_memcached_container-823e0f5c | None | memcached | infra1 | None | 10.20.20.165 | None |
| infra2_memcached_container-b808b561 | None | memcached | infra2 | None | 10.20.20.250 | None |
| infra3_memcached_container-6b22d0b1 | None | memcached | infra3 | None | 10.20.20.166 | None |
| infra1_neutron_agents_container-2c8cfc26 | None | neutron_agent | infra1 | None | 10.20.20.72 | None |
| infra2_neutron_agents_container-da604256 | None | neutron_agent | infra2 | None | 10.20.20.202 | None |
| infra3_neutron_agents_container-baf9fc93 | None | neutron_agent | infra3 | None | 10.20.20.209 | None |
| infra1_neutron_server_container-3a820c3a | None | neutron_server | infra1 | None | 10.20.20.68 | None |
| infra2_neutron_server_container-1fb85a80 | None | neutron_server | infra2 | None | 10.20.20.235 | None |
| infra3_neutron_server_container-357febaa | None | neutron_server | infra3 | None | 10.20.20.164 | None |
| infra1_nova_api_metadata_container-45e5bd4c | None | nova_api_metadata | infra1 | None | 10.20.20.203 | None |
| infra2_nova_api_metadata_container-fbd1f355 | None | nova_api_metadata | infra2 | None | 10.20.20.227 | None |
| infra3_nova_api_metadata_container-ca3b0688 | None | nova_api_metadata | infra3 | None | 10.20.20.213 | None |
| infra1_nova_api_os_compute_container-bba3453e | None | nova_api_os_compute | infra1 | None | 10.20.20.54 | None |
| infra2_nova_api_os_compute_container-1eea962e | None | nova_api_os_compute | infra2 | None | 10.20.20.253 | None |
| infra3_nova_api_os_compute_container-22b11b8e | None | nova_api_os_compute | infra3 | None | 10.20.20.237 | None |
| infra1_nova_api_placement_container-a6256190 | None | nova_api_placement | infra1 | None | 10.20.20.243 | None |
| infra2_nova_api_placement_container-5cc21228 | None | nova_api_placement | infra2 | None | 10.20.20.86 | None |
| infra3_nova_api_placement_container-27eda8e8 | None | nova_api_placement | infra3 | None | 10.20.20.74 | None |
| infra1_nova_conductor_container-21b30762 | None | nova_conductor | infra1 | None | 10.20.20.177 | None |
| infra2_nova_conductor_container-bff97b73 | None | nova_conductor | infra2 | None | 10.20.20.246 | None |
| infra3_nova_conductor_container-d384d6eb | None | nova_conductor | infra3 | None | 10.20.20.65 | None |
| infra1_nova_console_container-86f55a54 | None | nova_console | infra1 | None | 10.20.20.206 | None |
| infra2_nova_console_container-99dbfa6e | None | nova_console | infra2 | None | 10.20.20.236 | None |
| infra3_nova_console_container-f57a1e27 | None | nova_console | infra3 | None | 10.20.20.89 | None |
| infra1_nova_scheduler_container-b84c323a | None | nova_scheduler | infra1 | None | 10.20.20.212 | None |
| infra2_nova_scheduler_container-403753e3 | None | nova_scheduler | infra2 | None | 10.20.20.99 | None |
| infra3_nova_scheduler_container-8de84033 | None | nova_scheduler | infra3 | None | 10.20.20.95 | None |
| infra1_repo_container-745bad06 | None | pkg_repo | infra1 | None | 10.20.20.70 | None |
| infra2_repo_container-495c6de1 | None | pkg_repo | infra2 | None | 10.20.20.228 | None |
| infra3_repo_container-aa03d50a | None | pkg_repo | infra3 | None | 10.20.20.94 | None |
| infra1_rabbit_mq_container-7016f414 | None | rabbitmq | infra1 | None | 10.20.20.83 | None |
| infra2_rabbit_mq_container-3a8e985a | None | rabbitmq | infra2 | None | 10.20.20.80 | None |
| infra3_rabbit_mq_container-385f0d02 | None | rabbitmq | infra3 | None | 10.20.20.201 | None |
| infra4_rsyslog_container-eeff7432 | None | rsyslog | infra4 | None | 10.20.20.75 | None |
| infra1_utility_container-836672cc | None | utility | infra1 | None | 10.20.20.170 | None |
| infra2_utility_container-31a8813b | None | utility | infra2 | None | 10.20.20.197 | None |
| infra3_utility_container-dacfde99 | None | utility | infra3 | None | 10.20.20.188 | None |
+------------------------------------------------+----------+--------------------------+---------------+----------------+---------------+------------------------+

root@openstack1:/opt/openstack-ansible/scripts#
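With this many rows, a per-component count is easier to compare against the layout in openstack_user_config.yml (three containers per service on infra1 to infra3, one rsyslog container on infra4, four metal entries for the compute agent and cinder_backup). A minimal sketch that parses the table printed by `inventory-manage.py -l`; `component_counts` is our own name and it assumes the column order shown above.

```shell
# component_counts: read the `inventory-manage.py -l` table on stdin and print
# "component count" for each component, sorted by name.
component_counts() {
  awk -F'|' 'NF > 7 {
    c = $4                      # third cell: the component column
    gsub(/^ +| +$/, "", c)      # trim padding around the cell
    if (c != "component") count[c]++
  }
  END { for (c in count) print c, count[c] }' | LC_ALL=C sort
}
```

Usage: `./inventory-manage.py -l | component_counts`.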

8.2 Verify the OpenStack API

root@openstack1:/opt/openstack-ansible/scripts# ./inventory-manage.py -l|grep util
| infra1_utility_container-836672cc | None | utility | infra1 | None | 10.20.20.170 | None |
| infra2_utility_container-31a8813b | None | utility | infra2 | None | 10.20.20.197 | None |
| infra3_utility_container-dacfde99 | None | utility | infra3 | None | 10.20.20.188 | None |
root@openstack1:/opt/openstack-ansible/scripts#

ssh root@infra1_utility_container-836672cc


root@infra1-utility-container-836672cc:~# source openrc
root@infra1-utility-container-836672cc:~# openstack user list
+----------------------------------+--------------------+
| ID | Name |
+----------------------------------+--------------------+
| 040d6e3b67d7450d880cdb4e77509067 | glance |
| 13b0477ec4bc4a3d861257bd73f84a05 | ceilometer |
| 175cd95c55db4e4ea86699f31865ae65 | aodh |
| 4abb6d992188412399cc8dfe80cb5cb4 | keystone |
| 4f15a3c4da2440d3a6822c125fff12db | cinder |
| 6acd0a000f9049feabfd9064c5af4c0c | neutron |
| 778056b4ff8b40448b777bb423b49716 | stack_domain_admin |
| 89338cee034241f88b1e89171ed0feb4 | heat |
| 8d89b2e47e5b40efb3bc709f3271f0d1 | admin |
| aa7634cbf9e541a8b9c7140ccf4c43c8 | gnocchi |
| b9e06b4525cb4d859305235d0088c1c0 | nova |
| c32b371de0ed4f438dc28b2fe4df22ac | placement |
+----------------------------------+--------------------+
root@infra1-utility-container-836672cc:~#

root@infra1-utility-container-836672cc:~# openstack service list
+----------------------------------+------------+----------------+
| ID | Name | Type |
+----------------------------------+------------+----------------+
| 3219a914279d4ff2b249d0c2f45aad28 | heat-cfn | cloudformation |
| 3350112667864ba19936dfa2def3e8c1 | cinder | volume |
| 4688c1294f504eb8bb0cd092c58b6c19 | nova | compute |
| 5ed33166b5c1465f8b2320fd484ff249 | cinderv2 | volumev2 |
| 6b0a5cf4caf94ccf9a84f76efcc96338 | keystone | identity |
| 76947efc711a4fd1b1e59255a767b588 | cinderv3 | volumev3 |
| 7a607d25b17a46e1b35b80d6bedbe965 | gnocchi | metric |
| 7d5922e395ed4f83a3cf4f17bc0a1cd8 | glance | image |
| 812dd3096f7942e6abd66c915f8cfe0d | aodh | alarming |
| 8c780a772a434ebab511dbddf402074c | ceilometer | metering |
| ad41a628c5d245ba93ddc3380f299399 | heat | orchestration |
| c2f5b570d25c483d898dc58dea4e9e37 | placement | placement |
| f511704a7cf749abac3029c2d439fb9e | neutron | network |
+----------------------------------+------------+----------------+
root@infra1-utility-container-836672cc:~#
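Beyond listing the services, it is worth confirming that each one registered a public, an internal and an admin endpoint, since a missing interface usually only shows up later as a confusing client error. The sketch below reads the output of `openstack endpoint list -f value -c "Service Name" -c Interface` (one "service interface" pair per line); `incomplete_endpoints` is our own name.

```shell
# incomplete_endpoints: read "service interface" pairs on stdin, print every
# service/interface combination that is missing, and fail if there are any.
incomplete_endpoints() {
  local missing
  missing=$(awk '
    NF >= 2 { seen[$1 " " $2] = 1; svc[$1] = 1 }
    END {
      n = split("public internal admin", want, " ")
      for (s in svc)
        for (i = 1; i <= n; i++)
          if (!((s " " want[i]) in seen)) print s, want[i]
    }')
  [ -z "$missing" ] && return 0
  printf '%s\n' "$missing" | LC_ALL=C sort
  return 1
}
```

Usage, inside the utility container after `source openrc`: `openstack endpoint list -f value -c "Service Name" -c Interface | incomplete_endpoints`.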

root@infra1-utility-container-836672cc:~# openstack endpoint list

+----------------------------------+-----------+--------------+----------------+---------+-----------+----------------------------------------------+
| ID                               | Region    | Service Name | Service Type   | Enabled | Interface | URL                                          |
+----------------------------------+-----------+--------------+----------------+---------+-----------+----------------------------------------------+
| 092102d3ba4a489abfcfb3c9408f6e6e | RegionOne | cinder       | volume         | True    | public    | http://openstack.net:8776/v1/%(tenant_id)s   |
| 0cdfc0ef5e834fac949e9b6130c6728e | RegionOne | nova         | compute        | True    | public    | http://openstack.net:8774/v2.1/%(tenant_id)s |
| 159383c78ce9456d87d38ed2339a0246 | RegionOne | placement    | placement      | True    | admin     | http://10.20.20.50:8780/placement            |
| 18eb94dbd77c40a480dd7239145bc47c | RegionOne | cinderv2     | volumev2       | True    | internal  | http://10.20.20.50:8776/v2/%(tenant_id)s     |
| 1b2df9e4704a4882addcb8dba9539ae4 | RegionOne | keystone     | identity       | True    | internal  | http://10.20.20.50:5000/v3                   |
| 1ddcaeb0663f45ce82edd37c2254980e | RegionOne | cinder       | volume         | True    | internal  | http://10.20.20.50:8776/v1/%(tenant_id)s     |
| 26715c3ef1054c9584e8c4340f031a3b | RegionOne | nova         | compute        | True    | admin     | http://10.20.20.50:8774/v2.1/%(tenant_id)s   |
| 2f1f99ccbf9b440ba063c70ee0022e9c | RegionOne | placement    | placement      | True    | public    | http://openstackt:8780/placement             |
| 367a26c8a62c4ded889e2e7083017708 | RegionOne | neutron      | network        | True    | internal  | http://10.20.20.50:9696                      |
| 3b29dc27da1a469a8f4594d95b16da36 | RegionOne | neutron      | network        | True    | public    | http://openstack.net:9696                    |
| 3ee4b982f303412cbc61d35fe8cc45d2 | RegionOne | gnocchi      | metric         | True    | internal  | http://10.20.20.50:8041                      |
| 465752ae7af64b1ebb65d0cdbbf26726 | RegionOne | heat         | orchestration  | True    | admin     | http://10.20.20.50:8004/v1/%(tenant_id)s     |
| 4a48328a3642401d8d64af875c534c01 | RegionOne | cinderv2     | volumev2       | True    | public    | http://openstack.net:8776/v2/%(tenant_id)s   |
| 52d37ab4c49a4c919454be10476ffe8f | RegionOne | heat         | orchestration  | True    | internal  | http://10.20.20.50:8004/v1/%(tenant_id)s     |
| 57a4e0f36dae4d45ad9321946b47e6a3 | RegionOne | gnocchi      | metric         | True    | admin     | http://10.20.20.50:8041                      |
| 5a365052eba8484386336b69f48defb3 | RegionOne | aodh         | alarming       | True    | public    | http://openstack.net:8042                    |
| 5adc5052949e409fb3d5b8fd4c768351 | RegionOne | nova         | compute        | True    | internal  | http://10.20.20.50:8774/v2.1/%(tenant_id)s   |
| 6624f0dd9e884bcb96dcaddb71c0ad9d | RegionOne | keystone     | identity       | True    | public    | http://openstack.net:5000/v3                 |
| 6d30e2a1824c4a98bd163ad1ddc5d3e9 | RegionOne | placement    | placement      | True    | internal  | http://10.20.20.50:8780/placement            |
| 6fcdf9bf03aa4d4b858b371bb86490e6 | RegionOne | cinderv3     | volumev3       | True    | internal  | http://10.20.20.50:8776/v3/%(tenant_id)s     |
| 73c141fa9d9a4c8f8b0eba2d48ca4c34 | RegionOne | ceilometer   | metering       | True    | public    | http://openstack.net:8777                    |
| 7bae35ecb0694beaade731b5db1e75d6 | RegionOne | cinderv3     | volumev3       | True    | public    | http://openstack.net:8776/v3/%(tenant_id)s   |
| 824756626b1246ccbd0f9ecd5c086045 | RegionOne | heat         | orchestration  | True    | public    | http://openstack.net:8004/v1/%(tenant_id)s   |
| 85db8bfcf4f14107b6993effe9014021 | RegionOne | heat-cfn     | cloudformation | True    | admin     | http://10.20.20.50:8000/v1                   |
| 8e0fcc4c280c4dadac8a789bca5e95f7 | RegionOne | aodh         | alarming       | True    | admin     | http://10.20.20.50:8042                      |
| a9828ebb780e45348e294c43bdee94f2 | RegionOne | gnocchi      | metric         | True    | public    | http://openstack.net:8041                    |
| a9988892a303465d8ddd42874d639849 | RegionOne | glance       | image          | True    | internal  | http://10.20.20.50:9292                      |
| aa44ab02530c455f8c1a66b4cf6afd84 | RegionOne | heat-cfn     | cloudformation | True    | internal  | http://10.20.20.50:8000/v1                   |
| af53025f7f894a7bb4317f98dd3cdc58 | RegionOne | keystone     | identity       | True    | admin     | http://10.20.20.50:35357/v3                  |
| b2b16f433ff842178ffa10ee35fb48f2 | RegionOne | glance       | image          | True    | public    | http://openstack.net:9292                    |
| bafd2cb96b6347ebb51b672f80b180b2 | RegionOne | neutron      | network        | True    | admin     | http://10.20.20.50:9696                      |
| bbb22e3dc2f74c43a2fda9b835ad52b7 | RegionOne | ceilometer   | metering       | True    | admin     | http://10.20.20.50:8777/                     |
| d10c4761286541d492e7c156fa3689ae | RegionOne | glance       | image          | True    | admin     | http://10.20.20.50:9292                      |
| dbd192acd5fe4049b0f87fc346fa5368 | RegionOne | cinderv3     | volumev3       | True    | admin     | http://10.20.20.50:8776/v3/%(tenant_id)s     |
| e2d7f4369d124fd18e1e555ef0097a29 | RegionOne | cinder       | volume         | True    | admin     | http://10.20.20.50:8776/v1/%(tenant_id)s     |
| ee613984c72f487fbd928c12b4a8a26a | RegionOne | ceilometer   | metering       | True    | internal  | http://10.20.20.50:8777                      |
| f4bdf7fcb0884e5f88f0c8957764b538 | RegionOne | cinderv2     | volumev2       | True    | admin     | http://10.20.20.50:8776/v2/%(tenant_id)s     |
| f52ec71b2c3d43c98679187dcabae0d2 | RegionOne | heat-cfn     | cloudformation | True    | public    | http://openstack.net:8000/v1                 |
| f9b58e818b5b44dc8903ee0a3fe5e5b1 | RegionOne | aodh         | alarming       | True    | internal  | http://10.20.20.50:8042                      |
+----------------------------------+-----------+--------------+----------------+---------+-----------+----------------------------------------------+

root@infra1-utility-container-836672cc:~#
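Every service in the endpoint list above should expose a public, an internal and an admin endpoint. A rough sketch to flag services that are missing one of them, assuming the `-f value` and `-c` output options of the `openstack` client; `incomplete_endpoints` is a hypothetical helper name.

```shell
# incomplete_endpoints: read "<service name> <interface>" pairs on stdin and
# print every service that lacks a public, internal or admin endpoint.
incomplete_endpoints() {
    awk '
        NF >= 2 { seen[$1 " " $2] = 1; services[$1] = 1 }
        END {
            n = split("public internal admin", ifaces, " ")
            for (s in services)
                for (i = 1; i <= n; i++)
                    if (!((s " " ifaces[i]) in seen))
                        print s ": no " ifaces[i] " endpoint"
        }'
}
```

Usage: `openstack endpoint list -f value -c "Service Name" -c Interface | incomplete_endpoints`. For the catalog shown above it prints nothing, since each of the 13 services has all three interfaces.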

9. Debugging and Troubleshooting

9.1 Logs

If you need to debug anything, all the logs are centralized in the rsyslog container. To find it, list the inventory from the deployment host and then SSH into the container:

root@openstack1:/opt/openstack-ansible/scripts# ./inventory-manage.py -l|grep rsyslog

| infra4_rsyslog_container-eeff7432 | None | rsyslog | infra4 | None | 10.20.20.75 | None |

root@openstack1:/etc/openstack_deploy# ssh infra4_rsyslog_container-eeff7432

root@infra4-rsyslog-container-eeff7432:~# cd /var/log/log-storage/

root@infra4-rsyslog-container-eeff7432:/var/log/log-storage# ls

infra1 infra1.openstack.local infra2-nova-conductor-container-bff97b73 infra3-nova-api-metadata-container-ca3b0688
infra1-cinder-api-container-0936e945 infra1-rabbit-mq-container-7016f414 infra2-nova-console-container-99dbfa6e infra3-nova-api-os-compute-container-22b11b8e
infra1-cinder-scheduler-container-fc83ebc4 infra1-repo-container-745bad06 infra2-nova-scheduler-container-403753e3 infra3-nova-api-placement-container-27eda8e8
infra1-galera-container-3553e5ad infra2 infra2.openstack.local infra3-nova-conductor-container-d384d6eb
infra1-glance-container-0525f6a0 infra2-cinder-api-container-b6fe5dba infra2-rabbit-mq-container-3a8e985a infra3-nova-console-container-f57a1e27
infra1-heat-apis-container-1f4677cd infra2-cinder-scheduler-container-a757f7fd infra2-repo-container-495c6de1 infra3-nova-scheduler-container-8de84033
infra1-heat-engine-container-490a2be3 infra2-galera-container-e1d4feff infra3 infra3.openstack.local
infra1-horizon-container-3e18da0f infra2-glance-container-987db1c7 infra3-cinder-api-container-fe0b3819 infra3-rabbit-mq-container-385f0d02
infra1-keystone-container-33cfd6e8 infra2-heat-apis-container-ace23717 infra3-cinder-scheduler-container-141627ce infra3-repo-container-aa03d50a
infra1-neutron-agents-container-2c8cfc26 infra2-heat-engine-container-3b00a93c infra3-galera-container-bc9c86db infra4
infra1-neutron-server-container-3a820c3a infra2-horizon-container-c88d4a4f infra3-glance-container-ec5c3ea9 infra4.openstack.local
infra1-nova-api-metadata-container-45e5bd4c infra2-keystone-container-b01d0dfa infra3-heat-apis-container-65685847 stor1
infra1-nova-api-os-compute-container-bba3453e infra2-neutron-agents-container-da604256 infra3-heat-engine-container-9890e6ca stor2
infra1-nova-api-placement-container-a6256190 infra2-neutron-server-container-1fb85a80 infra3-horizon-container-88c85174 stor3
infra1-nova-conductor-container-21b30762 infra2-nova-api-metadata-container-fbd1f355 infra3-keystone-container-0c3a530e stor4
infra1-nova-console-container-86f55a54 infra2-nova-api-os-compute-container-1eea962e infra3-neutron-agents-container-baf9fc93
infra1-nova-scheduler-container-b84c323a infra2-nova-api-placement-container-5cc21228 infra3-neutron-server-container-357febaa

root@infra4-rsyslog-container-eeff7432:/var/log/log-storage#
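Rather than copying the container name by hand, it can be extracted from the `inventory-manage.py -l` output, and the per-host log tree can then be searched for errors. A minimal sketch: both helper names are hypothetical, and the default pattern `ERROR` is only an assumption about what you are looking for.

```shell
# rsyslog_container: read `inventory-manage.py -l` output on stdin and print
# the name of the first rsyslog container (the second "|"-separated field).
rsyslog_container() {
    awk -F'|' '/rsyslog/ { gsub(/[ \t]/, "", $2); print $2; exit }'
}

# logs_with_errors [DIR] [PATTERN]: list the files under DIR (default
# /var/log/log-storage) that contain PATTERN (default "ERROR").
logs_with_errors() {
    local dir="${1:-/var/log/log-storage}" pattern="${2:-ERROR}"
    grep -rl -- "$pattern" "$dir"
}
```

From /opt/openstack-ansible/scripts on the deployment host, `ssh "$(./inventory-manage.py -l | rsyslog_container)"` opens a shell in the rsyslog container; with `logs_with_errors` defined there, running it without arguments lists the log files worth opening first.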

9.2 Notes

- Add the OpenStack VIP to /etc/hosts on all servers.

- If you encounter the error below:

'rb')\nIOError: [Errno 2] No such file or directory: '/var/www/repo/pools/ubuntu-16.04-x86_64/ldappool/ldappool-2.1.0-py2.py3-none-any.whl'", "stdout": "Collecting aodh from

Enter the repo container that generated the error and run the commands below:

cd /var/www/repo/
mkdir -p pools/ubuntu-16.04-x86_64
cp -r -p -v ubuntu-16.04-x86_64/* pools/ubuntu-16.04-x86_64/
cd pools/
chown -R nginx:www-data *
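Both notes above can be scripted so that they are safe to re-run. A sketch under these assumptions: the function names are hypothetical, and the paths and the `nginx:www-data` owner are taken from the manual steps above.

```shell
# add_hosts_entry IP NAME [FILE]: append "IP NAME" to FILE (default
# /etc/hosts) unless a non-comment line already maps NAME, so re-runs are no-ops.
add_hosts_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null && return 0
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# fix_repo_pool [REPO_ROOT] [OWNER]: the manual fix above, run inside the
# failing repo container: copy the built wheels into pools/ubuntu-16.04-x86_64
# and hand everything under pools/ to the web server user.
fix_repo_pool() {
    local root="${1:-/var/www/repo}" owner="${2:-nginx:www-data}"
    mkdir -p "$root/pools/ubuntu-16.04-x86_64"
    cp -r -p "$root/ubuntu-16.04-x86_64/." "$root/pools/ubuntu-16.04-x86_64/" || return 1
    chown -R "$owner" "$root"/pools/*
}
```

For example, run `add_hosts_entry <external-vip> openstack.net` on every server, where openstack.net is the public endpoint name used in this deployment and the VIP address is deployment-specific, and run `fix_repo_pool` inside the repo container that produced the error.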

9.3 How to restart all containers

lxc-system-manage system-rebuild
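With several infra hosts, the command has to run on each of them. A sketch that does this one host at a time over SSH, assuming `lxc-system-manage` is installed on every LXC host and that the host names (e.g. infra1 to infra4 from the log listing) resolve from the deployment host. `rebuild_all_hosts` is a hypothetical name, and the one-host-at-a-time ordering is our own choice, so that the Galera and RabbitMQ containers on all infra hosts are not restarted together.

```shell
# rebuild_all_hosts HOST...: run "lxc-system-manage system-rebuild" on each
# host in turn, stopping at the first failure. SSH may be overridden
# (e.g. SSH=echo) for a dry run; it is left unquoted so extra options split.
rebuild_all_hosts() {
    local host
    for host in "$@"; do
        echo "==> $host" >&2
        ${SSH:-ssh} "$host" lxc-system-manage system-rebuild || return 1
    done
}
```

Usage: `rebuild_all_hosts infra1 infra2 infra3 infra4`, or `SSH=echo rebuild_all_hosts infra1 infra2` to print the commands without running them.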

OSA is robust enough for both production and all-in-one (AIO) deployments. Considering how complex an OpenStack deployment can be, the OSA developers did a good job, and investigating issues is easy because all the logs are kept in a single place.